I remember managing my department’s server during my student days. It ran Windows Server, and I deployed websites with IIS and developed them in C# and ASP. I vividly recall making frequent trips to the server room, because remote uploading and deployment were not very reliable back then.

As technology advanced, I moved my website to WordPress because it offered quick deployment, automation, and remote publishing. Later, as static websites became popular, I switched to Hugo and other static site generators, which made my site faster and brought it under version control.

Now, I have returned to using WordPress, and I plan to summarize my journey in web development.

To get to the main topic: containers have made deploying applications simpler, more reliable, and more scalable. Installing a container runtime on a server you own is straightforward. For instance, Docker’s official website provides detailed installation tutorials for different systems, such as CentOS at https://docs.docker.com/engine/install/centos/.

Today, I am using Docker to deploy LobeChat. AI middleware now works much like a front-end framework template: a single program handles API calls, prompt passing, and agent aggregation, and is distributed as one package. For details about LobeChat, the official documentation is the most up-to-date source; see the GitHub README. Here are the features I find most appealing:

- Supports the latest modalities, including comprehensive image and voice interfaces.

- Approachable for people outside the AI industry, including ordinary developers and users, who can write detailed prompts from their own domain knowledge.

- Extensible: it is open source with developer-friendly APIs, which gives experienced developers plenty of room to experiment. As a user, I can gradually learn how to build more customized features.

- A polished user interface, although chat interfaces are becoming increasingly similar.

- Supports PWA, making the app interface and usability closer to native applications.

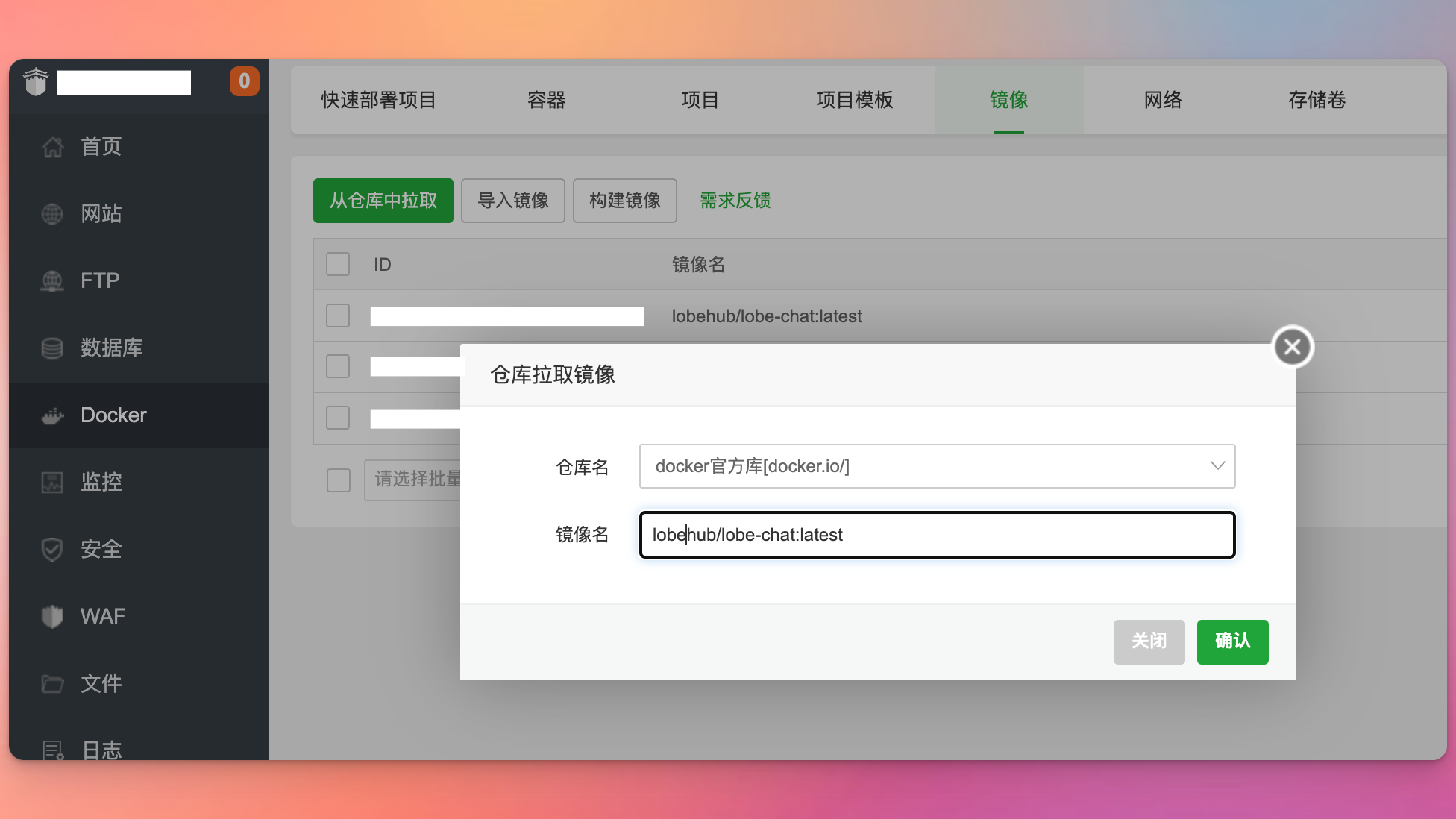

Obtaining the Docker Image

The following command can be used to pull the official image from https://hub.docker.com/r/lobehub/lobe-chat:

```bash
sudo docker pull lobehub/lobe-chat:latest
```

If you have installed a visual server management panel with Docker support, such as BaoTa Panel, you can pull the image directly through its web UI.

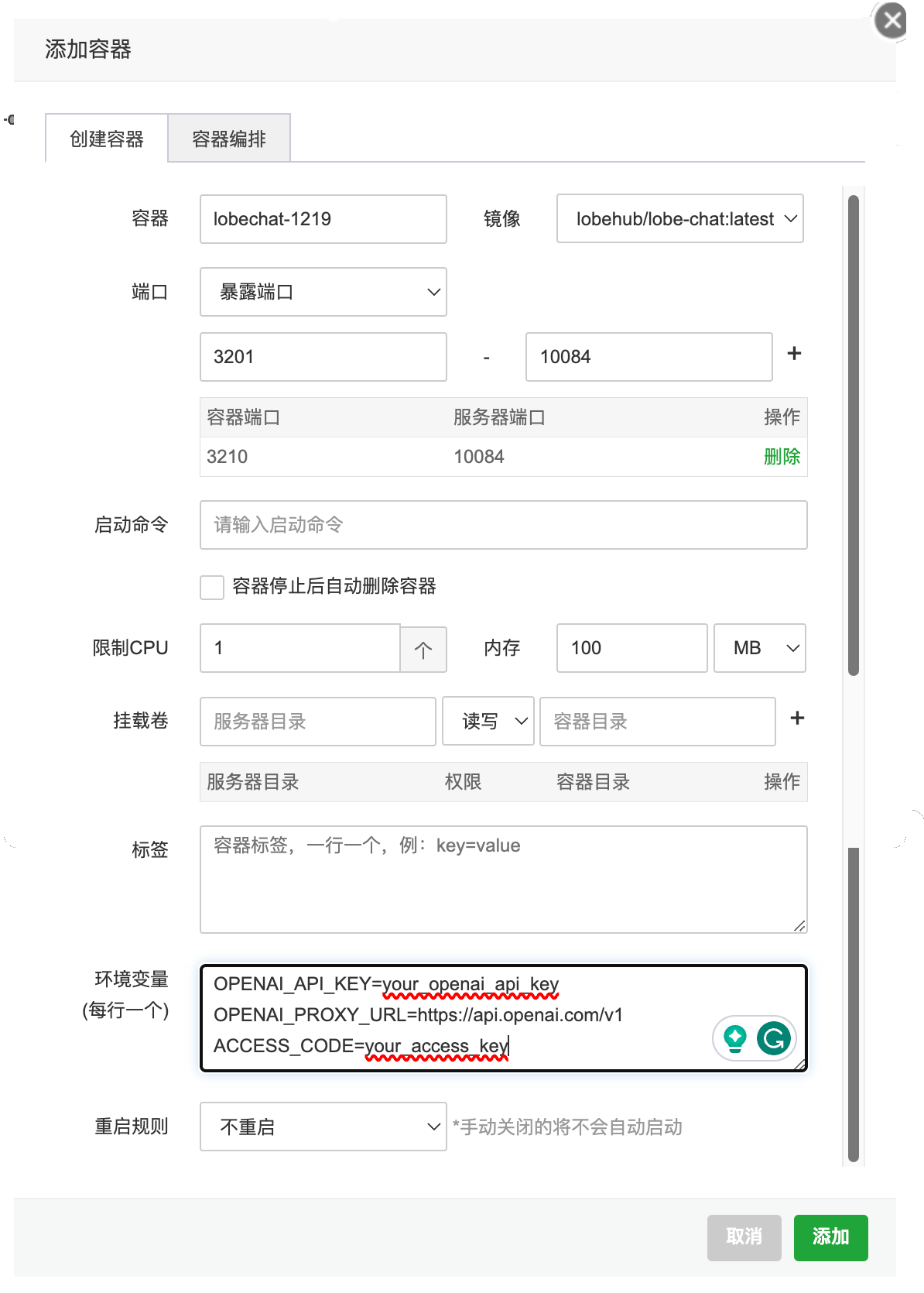

Running the Container

Run the image you just pulled with `docker run`:

```bash
sudo docker run -d \
  --name lobe-chat \
  -p 10084:3210 \
  -e OPENAI_API_KEY=your_openai_api_key \
  -e OPENAI_PROXY_URL=https://api.openai.com/v1 \
  -e ACCESS_CODE=your_access_key \
  lobehub/lobe-chat:latest
```

What each option does:

- `-d`: run the container in the background (detached mode).

- `--name lobe-chat`: give the container a name for easier management.

- `-p 10084:3210`: map the container’s port 3210 to port 10084 on the host (TCP by default).

- `-e OPENAI_API_KEY=your_openai_api_key`: your OpenAI API key.

- `-e OPENAI_PROXY_URL=https://api.openai.com/v1`: the OpenAI API endpoint or proxy; the default is the official endpoint.

- `-e ACCESS_CODE=your_access_key`: the password required to access your LobeChat service.
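If you prefer to script the deployment, the same command can be assembled programmatically. The following is a minimal sketch, assuming Python 3.8+ and the `docker` CLI on your PATH (run it as root or as a user in the `docker` group, since it omits `sudo`). The helper names `build_run_args` and `run_container` are hypothetical; the flags and values simply mirror the command above.

```python
from __future__ import annotations

import shlex
import subprocess


# Hypothetical helper: mirrors the flags of the docker run command above.
def build_run_args(
    env: dict[str, str],
    name: str = "lobe-chat",
    host_port: int = 10084,
    container_port: int = 3210,
    image: str = "lobehub/lobe-chat:latest",
) -> list[str]:
    """Assemble the `docker run` argument list without executing it."""
    args = ["docker", "run", "-d", "--name", name,
            "-p", f"{host_port}:{container_port}"]
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]  # one -e flag per environment variable
    args.append(image)
    return args


# Hypothetical helper: actually starts the container.
def run_container(args: list[str]) -> str:
    """Run the command and return the container ID that `docker run -d` prints."""
    result = subprocess.run(args, check=True, capture_output=True, text=True)
    return result.stdout.strip()


if __name__ == "__main__":
    env = {
        "OPENAI_API_KEY": "your_openai_api_key",
        "OPENAI_PROXY_URL": "https://api.openai.com/v1",
        "ACCESS_CODE": "your_access_key",
    }
    args = build_run_args(env)
    print(shlex.join(args))  # preview the exact command before running it
    # run_container(args)    # uncomment to start the container (requires Docker)
```

Printing the command with `shlex.join` first lets you review it before anything runs, and keeping the environment variables in one dictionary makes it less error-prone to re-create the container later.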

Reverse Proxy

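If you have a subdomain, such as chat.icsteve.com, you can set up a reverse proxy so that https://chat.icsteve.com points to your self-deployed LobeChat. With Apache (with mod_proxy and mod_proxy_http enabled), add the following directives to the site’s virtual host: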

```apache
ProxyPass / http://127.0.0.1:10084/
ProxyPassReverse / http://127.0.0.1:10084/
```

Now you can access LobeChat through your domain name, or directly through your server’s IP address and port 10084.
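To confirm that both the direct port and the reverse proxy actually reach the container, a small reachability probe can help. This is a minimal sketch using only the Python standard library, assuming Python 3.7+; `probe` is a hypothetical helper name. It treats any HTTP status as "something answered", so a 502 or 503 from your domain usually means Apache is running but cannot reach the container, while `None` means nothing answered at all.

```python
from __future__ import annotations

import urllib.error
import urllib.request


def probe(url: str, timeout: float = 5.0) -> int | None:
    """Return the HTTP status code from `url`, or None if nothing answered."""
    # Bypass any http_proxy environment settings so the server is tested directly.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    try:
        with opener.open(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # The server answered with an error status; it is still reachable.
        return err.code
    except OSError:
        # URLError (connection refused, DNS failure) and timeouts are OSError subclasses.
        return None


if __name__ == "__main__":
    # Add your proxied domain, e.g. "https://chat.icsteve.com/", once the proxy is set up.
    for url in ["http://127.0.0.1:10084/"]:
        print(url, probe(url))
```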

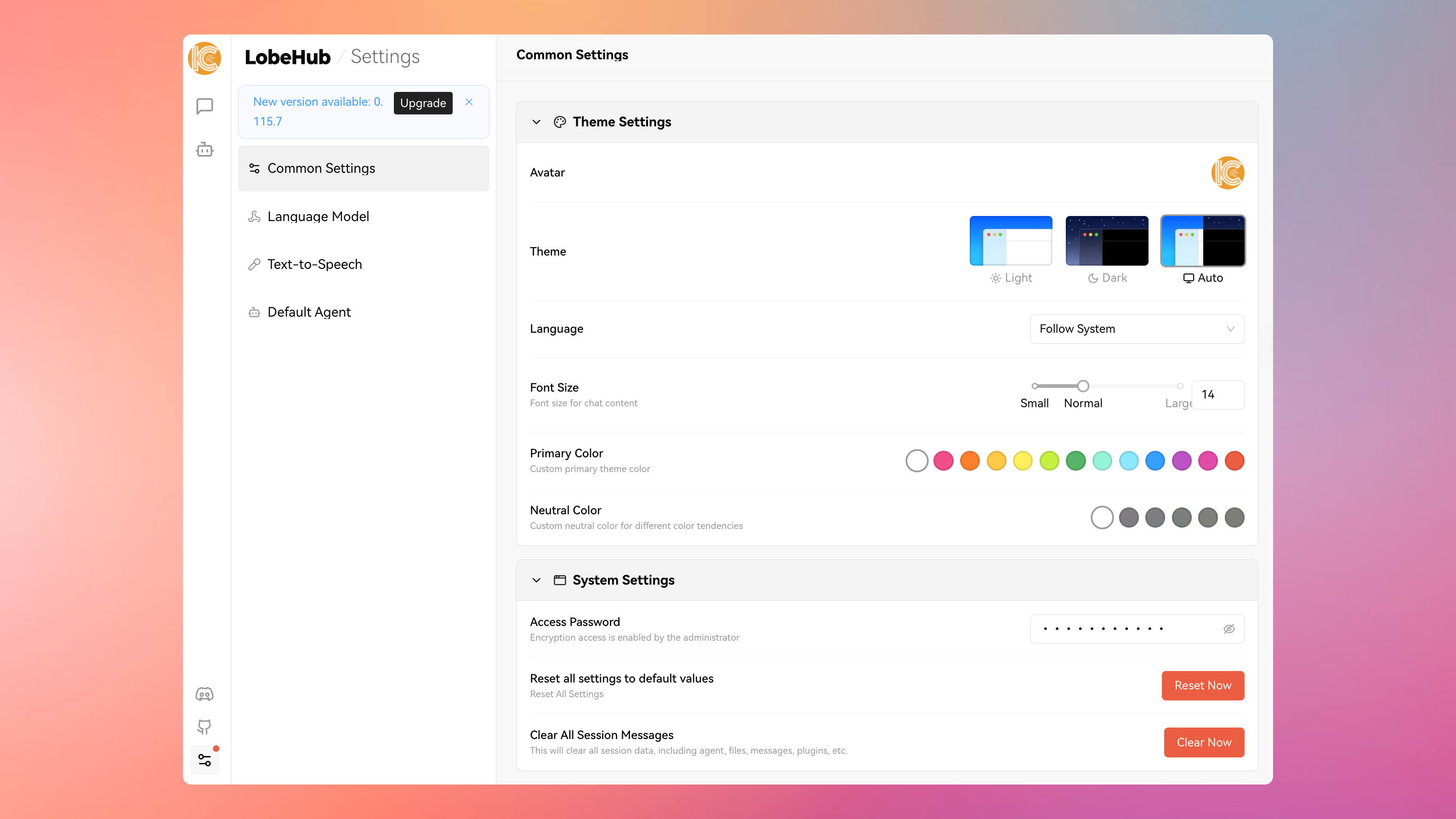

Using LobeChat

Before you can start chatting, you need to enter the access code, i.e. the value of the ACCESS_CODE environment variable you set when starting the container (your_access_key above). You can enter it under Setting > Common Setting > System Setting, or you will be prompted for it on first use.

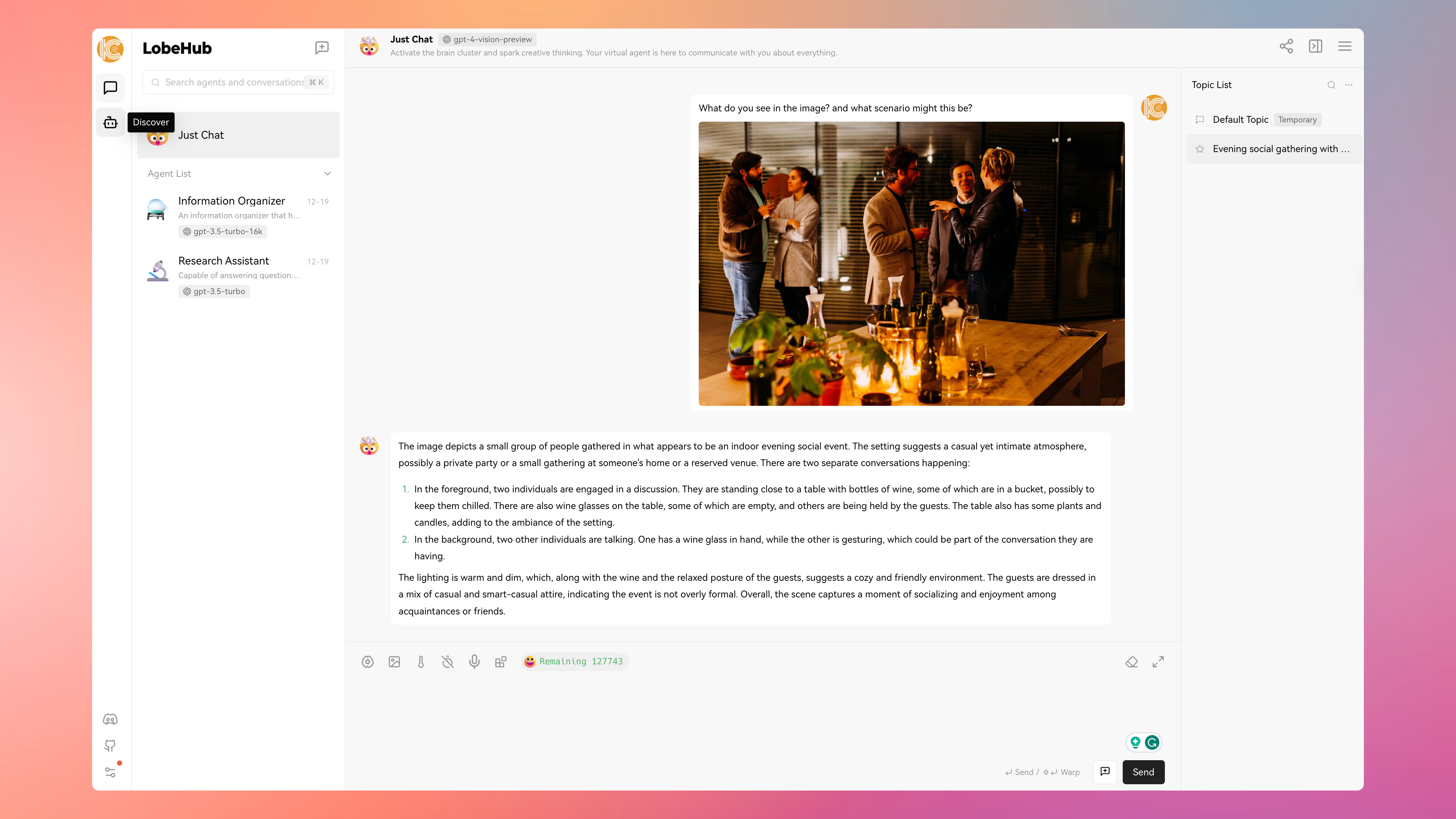

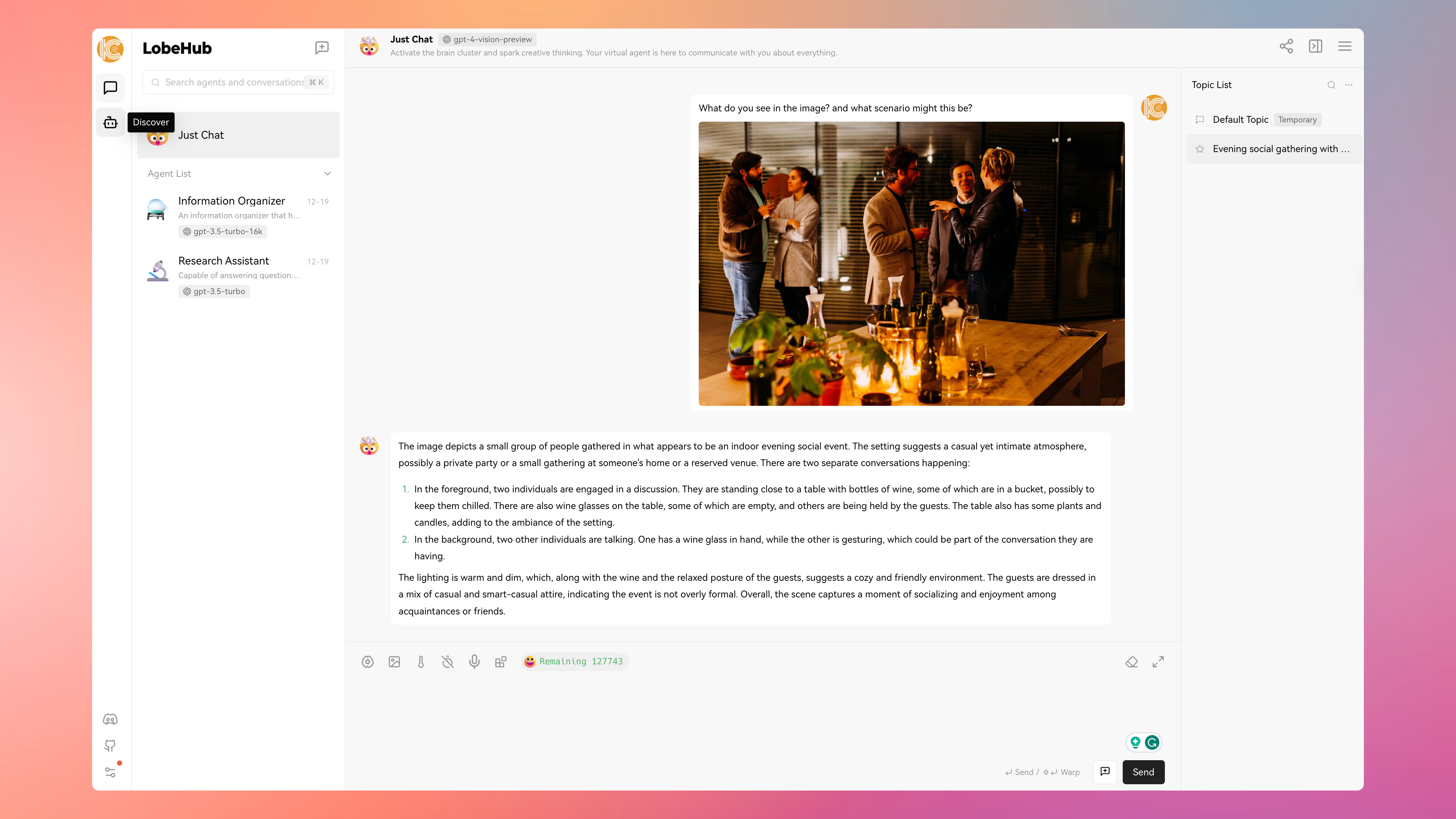

You can select different models during conversations. For example, here I chose gpt-4-vision-preview.

| Usage | Suggested model |
|---|---|
| Daily conversations, translation plugins, YouTube summary and search summary plugins, etc. | gpt-3.5-turbo |
| Scenarios requiring stronger reasoning, such as writing code or helping write papers | gpt-4 |
| Scenarios where the model needs to take images as input and answer questions about them | gpt-4-vision |

4K, 8K, 16K, 32K

These suffixes denote context length in tokens: 4K means 4,096 tokens, 8K means 8,192 tokens, and so on. As a rough rule of thumb, one Chinese character takes about 2 tokens, so a 4K model can handle roughly 2,000 Chinese characters (prompt and reply combined), and the capacity scales with the context length.
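The arithmetic behind these estimates is simple enough to script. This is a minimal sketch, assuming the rough 2-tokens-per-Chinese-character rule above; real counts depend on each model’s tokenizer, so treat the output as a ballpark figure. The function name and the `reserved_for_reply` parameter are hypothetical, added to reflect that the reply shares the same context window as the prompt.

```python
from __future__ import annotations

# Rule of thumb from the text: about 2 tokens per Chinese character.
# Actual token counts depend on the model's tokenizer.
TOKENS_PER_CHINESE_CHAR = 2

CONTEXT_SIZES = {"4K": 4096, "8K": 8192, "16K": 16384, "32K": 32768}


def approx_chinese_chars(context_tokens: int, reserved_for_reply: int = 0) -> int:
    """Estimate how many Chinese characters fit in the remaining token budget."""
    budget = max(context_tokens - reserved_for_reply, 0)  # never go negative
    return budget // TOKENS_PER_CHINESE_CHAR


if __name__ == "__main__":
    for label, tokens in CONTEXT_SIZES.items():
        print(f"{label}: {tokens} tokens ~ {approx_chinese_chars(tokens)} Chinese characters")
```

For a 4K model this gives 4096 // 2 = 2048 characters, which matches the "about 2,000" figure; reserving 1,024 tokens for the answer leaves room for roughly 1,536 characters of prompt.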

Testing it by asking about the contents of a picture made me think that AI will become an inseparable part of our lives, especially once devices like smart glasses integrate GPT models. For instance, you could ask for the name of the person in front of you, their preferences, or the latest updates on their social networks. What was once considered “science fiction” is no longer just fiction.

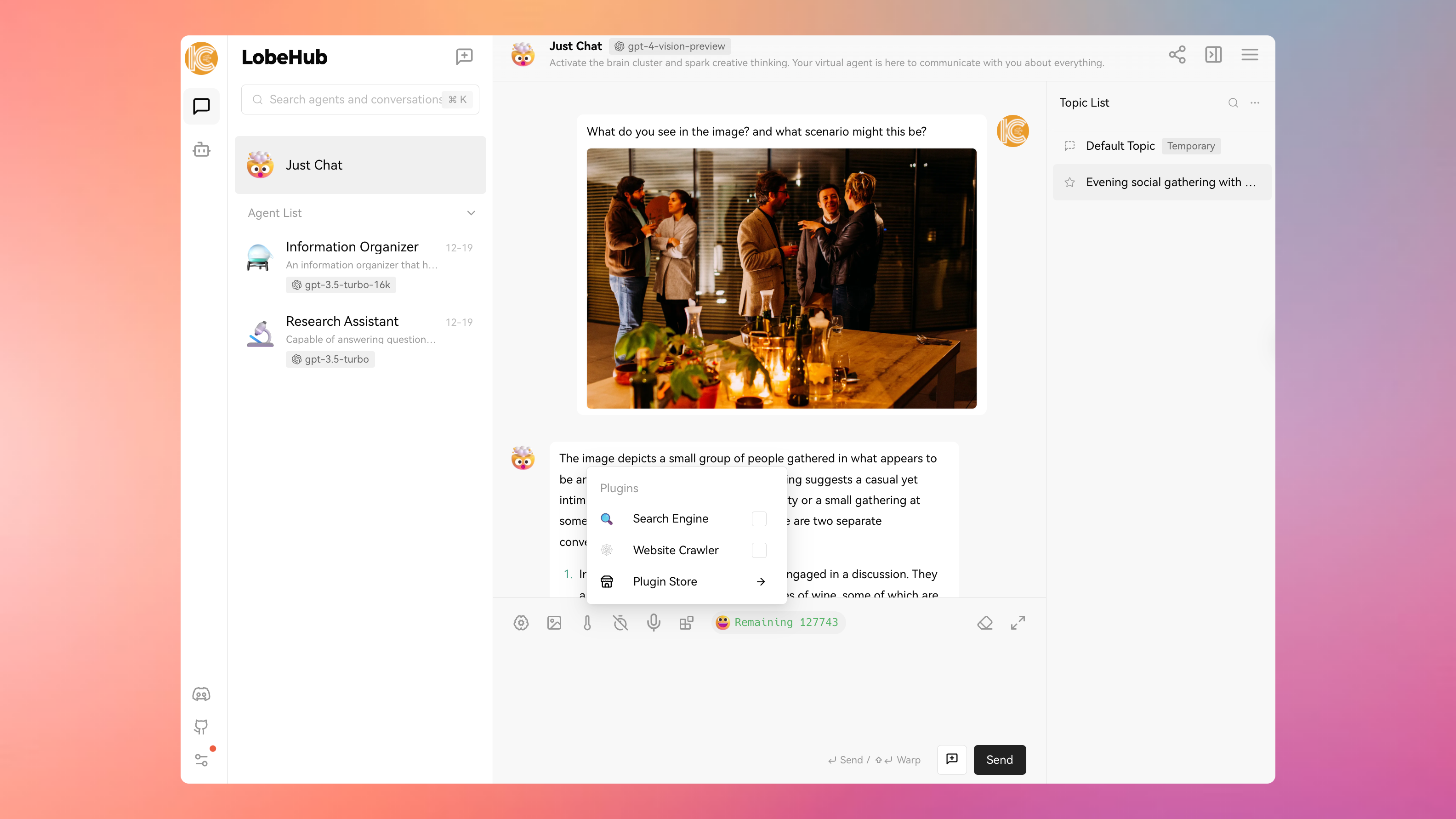

Plugins

As a CAD user, I have always considered plugins essential. They are an excellent way to extend existing functionality or to tailor a tool to a narrower use case. You can invoke plugins by clicking the last icon in the conversation menu, and you can also develop your own.

For further exploration and information, refer to the official documentation, which is frequently updated by the author.

If you deployed with Docker, updating means pulling the new image and re-creating the container from it. Essentially, the process involves two main steps: